The Uncertainty Problem

I received criticism for peddling the uncertainty trope in a presentation last year. The 'uncertainty trope' refers to the overuse or misrepresentation of uncertainty as a catch-all term, which can lead to a lack of clarity and actionable insights. The feedback was a gift. It prompted the deep thinking and reading that brought me to this blog. Along the way I got stuck. Then, this week, while I was reading LinkedIn posts on using scenario planning to deal with the generic term 'uncertainty', an insight struck, and a bit of LLM wrangling helped me get unstuck.

We've all heard the platitudes: "The only certainty is uncertainty", "Uncertainty is the new normal", and "We've never lived in such uncertain times".

These phrases lump distinct challenges together into a vague trope. The challenges include epistemic gaps, such as a pharmaceutical company unsure of a new drug's long-term side effects because clinical data are limited. They include semantic fog, such as an engineering requirement where the undefined term ‘reasonable effort’ leads to legal disputes. And they include ontological mismatches, such as an AI ethics board where engineers define fairness mathematically while policymakers see it as a matter of social justice.

Yes, uncertainty has always existed, but in 2025, as AI governance, climate tipping points, and geopolitical fractures defy linear analysis, conflating kinds of uncertainty risks paralysis.

Uncertainty generalisations collapse distinct challenges into a vague, un-actionable trope.

When a colleague recently told me, “We're drowning in uncertainty”, I thought, “But what type of uncertainty?” Some kinds we can model and others we can't, and pretending they're the same is the problem.

The stakes are high. Misdiagnosing uncertainty leads to mismatched tools: applying probabilistic models to value conflicts, or qualitative storytelling to quantifiable risks, breeds systemic miscommunication.

I'm becoming acutely aware that generic depictions of uncertainty are insufficient, especially in scenario-planning activities.

Four Faces of Uncertainty

1. Epistemic Uncertainty - The Limits of Knowledge

Epistemic uncertainty arises from incomplete information or inadequate models. Imagine assessing whether a new quantum computing startup's claims are feasible. Even with expert interviews, gaps persist: proprietary algorithms, unproven hardware stability, or undisclosed partnerships. This uncertainty is reducible, in theory, with better data or expertise, as described by Fox & Ülkümen [1].

Yet reduction has limits. As Fox & Ülkümen note, epistemic uncertainty often intertwines with aleatory uncertainty [1]. The result is a two-dimensional judgment, in which confidence in one's model (epistemic) qualifies assessments of stochastic outcomes (aleatory). For instance, a climate scientist might estimate a 70% chance of a shift in monsoon patterns (aleatory). The same scientist might attach low confidence to that estimate because cloud-cover modelling is incomplete (epistemic).
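One way to make this two-dimensional judgment concrete is to separate two things: the chance of the event itself, and a distribution expressing our confidence in that chance. The Python sketch below illustrates the idea; it is not Fox & Ülkümen's own formalism. It assumes a Beta distribution, and the `TwoLevelJudgement` class and its pseudo-counts are hypothetical. Both judgments share the same 70% chance; only the epistemic spread differs.

```python
import math
from dataclasses import dataclass

@dataclass
class TwoLevelJudgement:
    """Hypothetical belief about an event's probability, as Beta(alpha, beta).

    The mean is the aleatory estimate (the chance of the event); alpha + beta
    acts like a pseudo-sample size, so larger totals mean more epistemic confidence.
    """
    alpha: float
    beta: float

    def chance(self) -> float:
        # Aleatory layer: mean of the Beta distribution.
        return self.alpha / (self.alpha + self.beta)

    def spread(self) -> float:
        # Epistemic layer: standard deviation of the Beta distribution.
        n = self.alpha + self.beta
        return math.sqrt(self.alpha * self.beta / (n * n * (n + 1)))

low_confidence = TwoLevelJudgement(alpha=7, beta=3)     # sparse cloud-cover modelling
high_confidence = TwoLevelJudgement(alpha=70, beta=30)  # a much better-supported model

for label, judgement in [("low", low_confidence), ("high", high_confidence)]:
    print(f"{label} confidence: chance={judgement.chance():.2f}, spread={judgement.spread():.3f}")
```

Better cloud-cover modelling would appear as larger pseudo-counts. These narrow the spread without necessarily moving the 70% estimate.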

2. Aleatory Uncertainty - Chance and Randomness

Aleatory uncertainty, the uncertainty of chance and randomness, arises in systems where variability is intrinsic. Outcomes are governed by probabilistic processes rather than by missing knowledge. Even with perfect information about the system, individual outcomes remain unpredictable because randomness is built into the system itself. This is the 'dice roll' of reality, where fluctuations, noise, and random variation persist regardless of how much we measure or model.

Think of cryptocurrency volatility, where price swings emerge from the complex behaviour of countless market participants. Or think of mutation rates in gene-editing trials, where molecular interactions follow probabilistic laws despite controlled lab conditions. Epistemic uncertainty can shrink with better knowledge. Aleatory uncertainty cannot: it is irreducible but quantifiable, so it can be modelled statistically but never eliminated [1][2].
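A biased coin makes the distinction tangible. In this minimal sketch, the coin and its 0.5 bias are illustrative assumptions. As observations accumulate, the standard error of our estimate of the coin's bias falls: that is epistemic uncertainty shrinking. Meanwhile, the spread of the next flip's outcome stays fixed: that is aleatory uncertainty persisting.

```python
import math

def epistemic_spread(p: float, n_observations: int) -> float:
    """Standard error of an estimated probability after n observations.

    Shrinks as 1/sqrt(n): more data reduces our ignorance about p.
    """
    return math.sqrt(p * (1 - p) / n_observations)

def aleatory_spread(p: float) -> float:
    """Standard deviation of a single Bernoulli(p) outcome.

    Depends only on p, never on how much we have observed.
    """
    return math.sqrt(p * (1 - p))

p = 0.5  # illustrative coin bias
for n in (10, 100, 1_000, 10_000):
    print(f"n={n:>6}: epistemic={epistemic_spread(p, n):.4f}, aleatory={aleatory_spread(p):.4f}")
```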

The Probabilistic Modified Trends (PMT) school of scenario planning tackles aleatory uncertainty by blending historical trends with expert-judged probabilities of disruptive events. For example, analysts might extrapolate energy demand curves while assigning likelihoods to fusion breakthroughs or regulatory shocks [2][4]. However, PMT struggles when epistemic uncertainty contaminates the baseline model, such as when historical data fail to capture novel AI-driven consumption patterns.

3. Normative Uncertainty - Values

Normative uncertainty concerns what we ought to do when ethical frameworks conflict. Should a city prioritise affordable housing or green space? Is longevity research more urgent than pandemic preparedness? These dilemmas involve irreducible uncertainty about values, not just facts[3][5].

MacAskill's work on maximising expected choice-worthiness attempts to systematise such decisions by treating normative uncertainty like empirical risk [5]. But critics highlight incomparability: how do we weigh a utilitarian's ‘lives saved’ against a rights-based theorist's ‘rights preserved’?
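The core arithmetic of maximising expected choice-worthiness is a credence-weighted average, as in this minimal Python sketch. The credences, the two theories, and the 0 to 10 choice-worthiness scores are all invented for illustration. Putting both theories on one shared scale quietly assumes the intertheoretic comparability that critics dispute.

```python
def expected_choiceworthiness(credences, scores):
    """Credence-weighted choice-worthiness of each option.

    credences: {theory: probability that the theory is correct}
    scores:    {option: {theory: choice-worthiness on a shared scale}}
    """
    return {
        option: sum(credences[theory] * value for theory, value in by_theory.items())
        for option, by_theory in scores.items()
    }

# Hypothetical inputs for the housing-versus-green-space question.
credences = {"utilitarian": 0.6, "rights_based": 0.4}
scores = {
    "affordable_housing": {"utilitarian": 8, "rights_based": 5},
    "green_space": {"utilitarian": 6, "rights_based": 9},
}

ec = expected_choiceworthiness(credences, scores)
best = max(ec, key=ec.get)
print(ec, "->", best)
```

With these numbers, green space wins (7.2 against 6.8). Halve the rights-based scores, however, and affordable housing wins instead. That sensitivity to an arbitrary choice of scale is exactly the incomparability worry.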

Unlike PMT's statistical scaffolding, normative uncertainty largely resists quantification. It demands deliberative frameworks that respect the complexity of moral decision-making and surface value trade-offs.

4. Ontological Uncertainty - World View

Ontological uncertainty is the elephant in the boardroom—often unnoticed but deeply consequential. It emerges when stakeholders unknowingly operate with conflicting mental models of fundamental concepts: what entities exist, how they relate, what causes what, and even what constitutes reality itself [9][10].

Unlike epistemic uncertainty, which stems from missing or incomplete knowledge, ontological uncertainty arises from incompatible ways of structuring and interpreting knowledge. For example, a legal team may view ‘data privacy’ as a regulatory compliance issue, while an AI engineer sees it as an optimisation problem. The two work from fundamentally different assumptions about what ‘privacy’ means and how it should be addressed.

A 2024 study of AI ethics committees revealed stark ontological divides. Some members viewed ‘fairness’ as a statistical parity metric; others framed it as procedural equity in decision-making. These groups didn't disagree about data; they disagreed about what kind of thing ‘fairness’ is [10]. This uncertainty is insidious because it masquerades as consensus: people use shared terms like ‘sustainability’ or ‘risk’ while projecting divergent meanings onto them [9].
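To see why the engineers' view feels so concrete, consider one common formalisation: statistical (or demographic) parity difference. It is the gap between two groups' rates of favourable decisions. This is a minimal Python sketch with invented group names and decisions. Notice that nothing in it can represent procedural equity, such as whether applicants could contest a decision. That is precisely the other camp's concern.

```python
def selection_rate(decisions):
    """Share of favourable decisions (1 = approved, 0 = declined)."""
    return sum(decisions) / len(decisions)

def statistical_parity_difference(decisions_by_group, group_a, group_b):
    """Difference in selection rates; 0 means parity under this definition."""
    return (selection_rate(decisions_by_group[group_a])
            - selection_rate(decisions_by_group[group_b]))

# Invented decisions for two hypothetical groups.
decisions = {
    "group_a": [1, 1, 0, 1, 0, 1, 1, 0],  # 5 of 8 approved
    "group_b": [1, 0, 0, 1, 0, 0, 1, 0],  # 3 of 8 approved
}
print(statistical_parity_difference(decisions, "group_a", "group_b"))  # prints 0.25
```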

Scenario Planning: A Toolbox, Not a Panacea

The three main schools of scenario planning (Intuitive Logics, PMT, and La Prospective) each align broadly with different types of uncertainty:

PMT (Probabilistic Modified Trends) is best suited for domains dominated by aleatory uncertainty, such as infrastructure investment, where historical data can inform probabilistic models. For example, Monte Carlo simulations can help forecast 20-year energy portfolios, estimating the likelihood of breakthroughs in solar efficiency versus the risk of carbon tax policies collapsing.

Intuitive Logics addresses a mix of epistemic uncertainty (knowledge gaps) and normative uncertainty (value-laden decisions). It constructs plausible narratives to explore how different factors might interact. For instance, scenario planning for AI governance might examine how shifts in public trust and regulations around algorithmic transparency could shape the future.

La Prospective is most helpful for navigating deeply value-driven dilemmas where long-term adaptation is key. It helps decision-makers explore pathways on issues such as balancing urban densification with the preservation of cultural heritage, where the trade-offs are not just technical but deeply societal.
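The Monte Carlo idea mentioned for PMT can be reduced to a short Python sketch. Every number here is an invented assumption:

- a 4% base annual return;
- a 5% yearly chance of a solar-efficiency breakthrough that permanently adds 3 points;
- a 3% yearly chance that carbon tax policy collapses and permanently removes 2 points.

Running many seeded simulations turns those chances into a distribution of 20-year outcomes.

```python
import random
from statistics import mean, quantiles

def simulate_portfolio(n_runs=20_000, years=20, seed=42,
                       base_return=0.04,
                       p_breakthrough=0.05, breakthrough_boost=0.03,
                       p_tax_collapse=0.03, collapse_penalty=0.02):
    """Monte Carlo sketch of a portfolio's value after `years` years.

    Returns the final values (starting from 1.0) and the share of runs
    in which a breakthrough occurred. All parameters are hypothetical.
    """
    rng = random.Random(seed)  # seeded so results are reproducible
    finals, runs_with_breakthrough = [], 0
    for _ in range(n_runs):
        value, annual_return = 1.0, base_return
        broke_through = collapsed = False
        for _ in range(years):
            # Each event can happen at most once and then persists.
            if not broke_through and rng.random() < p_breakthrough:
                broke_through = True
                annual_return += breakthrough_boost
            if not collapsed and rng.random() < p_tax_collapse:
                collapsed = True
                annual_return -= collapse_penalty
            value *= 1 + annual_return
        finals.append(value)
        runs_with_breakthrough += int(broke_through)
    return finals, runs_with_breakthrough / n_runs

finals, share = simulate_portfolio()
cuts = quantiles(finals, n=20)  # 19 cut points: 5th, 10th, ..., 95th percentile
print(f"P(breakthrough within 20 years) ~ {share:.2f}")
print(f"mean {mean(finals):.2f}, 5th pct {cuts[0]:.2f}, 95th pct {cuts[-1]:.2f}")
```

Under these assumptions, the simulated breakthrough share should sit close to the analytic value 1 - 0.95^20, about 0.64. This gives a handy sanity check on the simulation.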

But there are pitfalls. A 2024 study critiqued PMT's overreliance on ‘expert judgment,’ noting anchoring biases in probability assignments for black-swan events[6]. Similarly, Intuitive Logics' qualitative scenarios risk glossing over quantifiable risks when epistemic uncertainty is low.

Navigating Uncertainty

Uncertainty is a spectrum of challenges requiring tailored responses; consider the following steps when navigating uncertainty (biased by my interest in scenarios):

Map the uncertainty type first. Is it a gap in knowledge (epistemic), inherent randomness (aleatory), differing worldviews (ontological), or conflicting values (normative)?

Match methods to the matrix. Use PMT for aleatory modelling, public deliberation for normative clashes, and hybrid approaches for intertwined uncertainties.

Embrace meta-uncertainty. Acknowledge when the classifications themselves are fuzzy. For example, AI ethics debates blend normative uncertainty (how much autonomy should we grant machines?) with epistemic unknowns (how will recursive self-improvement unfold?).
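The first two steps can be sketched as a simple lookup, as a thinking aid rather than a decision system. In this Python sketch, the method lists paraphrase the suggestions made in this piece. The entry for ontological uncertainty is an assumption, since no method is named for it above. The function name `match_methods` is hypothetical.

```python
METHODS = {
    "epistemic": ["gather better data or expertise", "Intuitive Logics scenarios"],
    "aleatory": ["PMT", "Monte Carlo simulation"],
    "normative": ["public deliberation", "La Prospective"],
    # Assumption: no method is suggested for ontological uncertainty in the text.
    "ontological": ["surface and align definitions of shared terms"],
}

def match_methods(uncertainty_types):
    """Map diagnosed uncertainty types to candidate methods."""
    types = set(uncertainty_types)
    unknown = types - set(METHODS)
    if unknown:
        raise ValueError(f"unrecognised uncertainty type(s): {sorted(unknown)}")
    methods = [m for t in sorted(types) for m in METHODS[t]]
    if len(types) > 1:
        # Intertwined uncertainties call for combining methods, not picking one.
        methods.append("hybrid approach combining the above")
    return methods

print(match_methods({"aleatory"}))
print(match_methods({"normative", "epistemic"}))
```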

I've still got a problem with Uncertainty!

Uncertainty is not a singular concept. It is plural, multifaceted, and complex. There is no one-size-fits-all approach to dealing with uncertainty, but we can work with it by taking a diagnostic stance. We must understand and adopt the appropriate tools, advocating for a nuanced approach, especially in scenario planning.

Next time someone complains, “Everything's so uncertain”, ask: Which part, what kind?

So, why do I still have a problem with uncertainty? Writing this blog has surfaced many unknown-unknowns, which have now become known-unknowns. In other words, it feels like I know less than when I started.

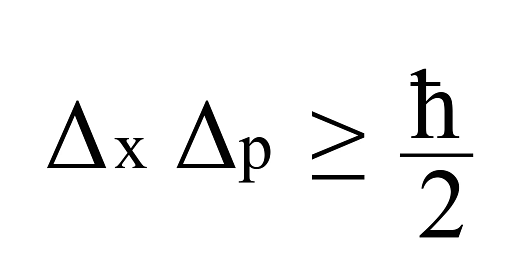

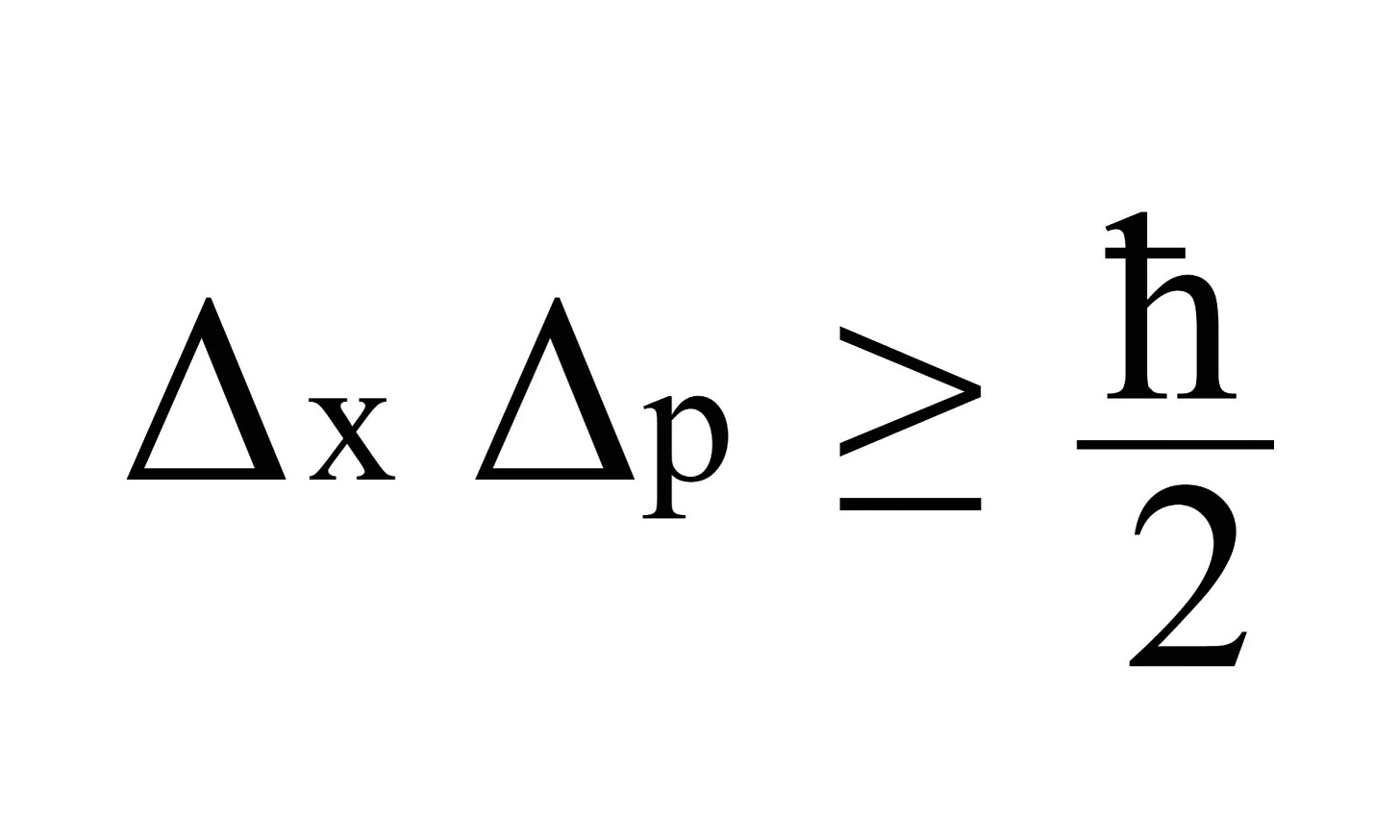

I haven't even touched on ambiguity, complex uncertainty, deep uncertainty, the unknowable or the manifestations of uncertainties across political, environmental, technological, and psychological contexts. I haven’t scratched my inner physicist’s itch around the Heisenberg Uncertainty Principle.

My epistemic uncertainty has grown!

I've been keeping raw notes on uncertainty in Notion, and I'm happy to share the page on request.

And who knows, there might be enough material for another ‘occasional’ blog soon.

Let's have the economists chime in. In decision-making theory, uncertainty refers to a specific situation in which risk cannot be evaluated. The classical field of decision-making (DM) theory relied on weighing the possible outcomes and optimizing for expected value, thus reducing your type 2 uncertainty, which is usually just called "risk".

However, behaviorists and cognitive scientists soon realized that human beings do not, in fact, readily compute expected utilities. (Hence 95% of micro-economic neoliberal doxa is plain wrong, by the way, but that is another story; thanks, Kahneman & Tversky.) Rather, we display an innate aversion to uncertainty. Even when it does not make sense from an expected-utility point of view, we tend to prefer situations that we can read and understand over situations that are fuzzy and ill-formed. A bird in the hand is, quite literally, worth two in the bush...
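The classic illustration of this aversion is Ellsberg's two-urn choice. One formal model of it is Gilboa and Schmeidler's maxmin expected utility, which scores a bet by its worst-case expected value over all plausible probabilities. In this minimal Python sketch, the prize of 100 and the uniform spread of possible urn compositions are illustrative assumptions. An expected-value maximiser is indifferent between the urns, while the maxmin agent strictly prefers the urn it can read.

```python
from fractions import Fraction

PRIZE = 100  # payout if a red ball is drawn (illustrative)

def expected_value(p_red):
    return p_red * PRIZE

# Known urn: exactly 50 red and 50 black, so the probability is known.
known_urn = [Fraction(1, 2)]
# Ambiguous urn: any share of red from 0% to 100% is considered possible.
ambiguous_urn = [Fraction(k, 100) for k in range(101)]

def average_ev(possible_p):
    # Expected-value agent with a uniform prior over the possible compositions.
    return sum(expected_value(p) for p in possible_p) / len(possible_p)

def maxmin_ev(possible_p):
    # Ambiguity-averse agent: judge a bet by its worst-case expected value.
    return min(expected_value(p) for p in possible_p)

print("expected value:", average_ev(known_urn), "vs", average_ev(ambiguous_urn))
print("maxmin:        ", maxmin_ev(known_urn), "vs", maxmin_ev(ambiguous_urn))
```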

When it came to designing DM frameworks (think resource allocation mechanisms, insurance contracts, etc.), marketing was very swift to take advantage of this bias (think yield management...).